Applying Prompt Engineering To leverage LLMs Like ChatGPT & Bard

Srikanth Renganathan (VP - Techpearl)

Introduction

Prompt Engineering is a discipline within artificial intelligence (AI) that involves systematic design & optimization of prompts and underlying data structures to guide AI systems towards achieving specific outputs. Prompt engineering involves creating specific prompts or cues to guide the generation of relevant text especially by large language models. It has gained popularity in recent years due to its effectiveness in fine-tuning pre-trained models like ChatGpt and Bard to generate more accurate and relevant responses.

The role of a prompt engineer is to create effective prompts or cues that guide the language model’s generation of text. This involves proving the right element to the LLM including instructions, context, input and output formats etc that trigger the desired response from the model. The prompt engineer works closely with developers and data scientists in a collaborative way to get the desired output from LLMs.

Using Hyperparameters to tune the response:

A prompt engineer tunes the hyper-parameters based on the requirement at hand.

Typical hyper-parameters in LLMs and ways to tune it.

- Temperature: Temperature is a hyperparameter that controls the randomness of the output. A higher temperature will result in more creative and diverse outputs, while a lower temperature will result in more accurate and predictable outputs.

- Top-K tokens:Top-K tokens is a hyperparameter that controls the number of tokens that are considered when generating an output. A higher value will result in more detailed and informative outputs, while a lower value will result in shorter and more concise outputs.

- Embeddings: Embeddings are the representations of words and phrases that are used by the LLM. You can tune the embeddings by changing the size of the embedding layer, the type of embedding, and the training data that is used to train the embeddings.

- Architecture: The architecture of the LLM can also be tuned. This includes the number of layers, the number of neurons per layer, and the type of activation function.

Example of tuning “temperature” hyper parameter:

To access and change hyperparameters like Temperature in ChatGPT or Bard, you can use the prompt_args parameter.

For example, to set the temperature to 0.7, you would use the following code:

Code snippet

response = chatGPT(prompt=”Write me a poem about love.”, prompt_args={“temperature”: 0.7})

- Temperature = 0.5: This will result in outputs that are similar to the training data.

- Temperature = 1.0: This will result in outputs that are more creative and diverse.

- Temperature = 2.0: This will result in outputs that are very creative and may not be very accurate.

You can also use the prompt_args parameter to change other hyperparameters, such as top-k tokens, beam width, and length penalty.

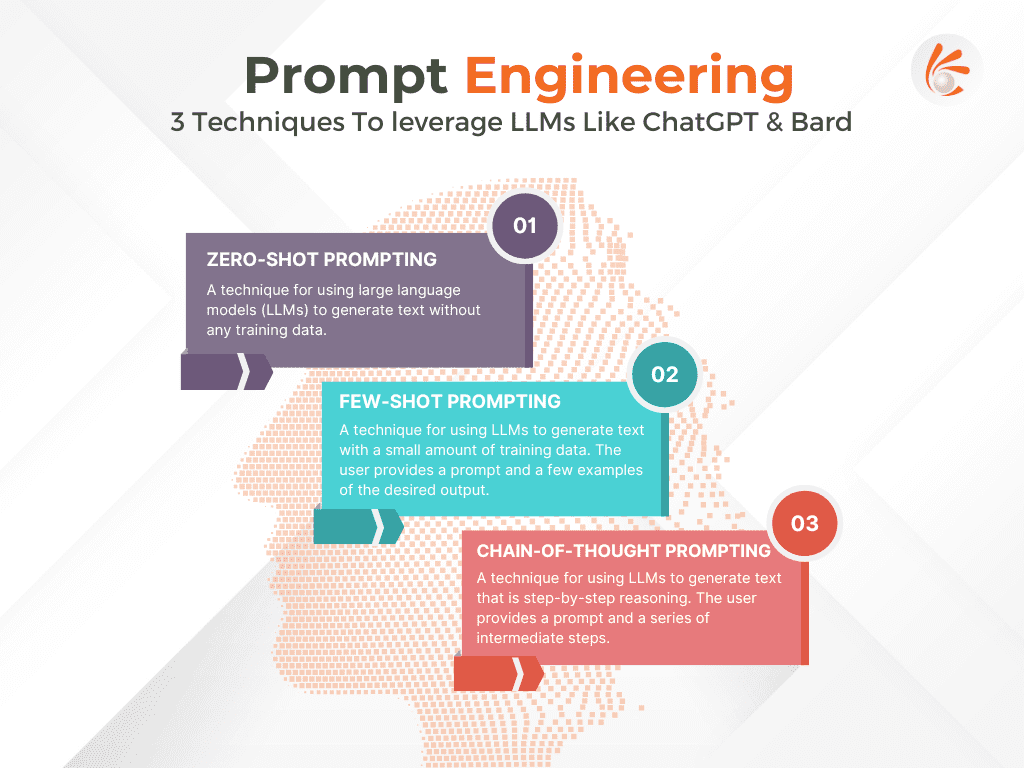

Prompting Techniques

Zero-shot prompting is a technique for using large language models (LLMs) to generate text without any training data. In zero-shot prompting, the user provides a prompt, which is a short piece of text that describes the desired output.

For example, the user might provide the prompt “Write a poem about love.” The LLM would then generate a poem about love.

Zero-shot prompting is a powerful technique that can be used for a variety of tasks, such as generating creative text, writing different kinds of creative content, and answering your questions in an informative way, even if they are open ended, challenging, or strange.

Few-shot prompting is a technique for using LLMs to generate text with a small amount of training data. In few-shot prompting, the user provides a prompt and a few examples of the desired output. The LLM then generates text that is consistent with the prompt and the examples.

For example, the user might provide the prompt “Write a poem about love” and a few examples of poems about love. The LLM would then generate a poem about love that is similar to the examples.

Few-shot prompting is a more powerful technique than zero-shot prompting, as it allows the user to provide the LLM with some guidance on the desired output.

Chain-of-thought prompting is a technique for using LLMs to generate text that is step-by-step reasoning. In chain-of-thought prompting, the user provides a prompt and a series of intermediate steps. The LLM then generates text that explains the intermediate steps and arrives at the final answer.

For example, the user might provide the prompt “How do I calculate the area of a triangle?” and a series of intermediate steps, such as “Find the length of the base” and “Find the height.” The LLM would then generate text that explains how to calculate the area of a triangle, step-by-step.

Chain-of-thought prompting is a powerful technique for using LLMs to solve problems that require step-by-step reasoning.

Technical examples of how these techniques can be applied

- Zero-shot prompting: A user might use zero-shot prompting to generate a poem about a specific topic, such as “Write a poem about the ocean.”

- Few-shot prompting: The user would provide the LLM with the prompt “Summarize this news article” and a few examples of summaries of news articles.

- Chain-of-thought prompting: The user would provide the LLM with the prompt “Solve this math problem” and a series of intermediate steps. The LLM would then generate text that explains how to solve the math problem, step-by-step.

Using Prompt Engineering For Software Development lifecycle

Large language models (LLMs) can be used in various phases of the code development lifecycle.

Requirements gathering

In the requirements gathering phase, LLMs can be used to generate natural language descriptions of the system to be developed by asking stakeholders questions about their needs. For example, an LLM could be asked to generate a description of a new e-commerce website by asking questions such as:

- What products will be sold on the website?

- How will customers be able to purchase products?

- How will customers be able to track their orders?

The LLM can then use this information to generate a high-level overview of the system, including its features, functionality, and user interface. This information can then be used to create a more detailed requirements document, which will be used to guide the development of the system.

Design

In the design phase, LLMs can be used to generate design snippets that implement the requirements. For example, an LLM could be asked to generate design snippets that implements a search feature for an e-commerce website by asking questions such as:

- How should the search results be displayed?

- How should the search results be sorted?

- How should the search results be filtered?

Implementation

In the implementation phase, LLMs can be used to generate code that is consistent with the design. For example, an LLM could be asked to generate code that implements a login feature for an e-commerce website by asking questions such as:

- How should users be authenticated?

- How should users be able to reset their passwords?

- How should users be able to change their passwords?

The LLM can then use this information to generate code that implements the login feature, including the user interface, the database queries, and the security checks.

Code-refactor example

Consider the following code:

Python

def calculate_area(length, width):

“””Calculates the area of a rectangle.

Args:

length: The length of the rectangle.

width: The width of the rectangle.

Returns:

The area of the rectangle.

“””

if length < 0 or width < 0:

raise ValueError(“The length and width must be positive.”)

return length * width

This code is perfectly functional, but it could be improved by refactoring it to use functions that are more specific to the task at hand. For example, we could create a function called is_positive() to check if a number is positive, and then use this function to check if the length and width are positive. We could then use this function to calculate the area of the rectangle, as follows:

Python

def is_positive(number):

“””Checks if a number is positive.

Args:

number: The number to check.

Returns:

True if the number is positive, False otherwise.

“””

return number > 0

def calculate_area(length, width):

“””Calculates the area of a rectangle.

Args:

length: The length of the rectangle.

width: The width of the rectangle.

Returns:

The area of the rectangle.

“””

if not is_positive(length) or not is_positive(width):

raise ValueError(“The length and width must be positive.”)

return length * width

This code is more readable and easier to maintain, because it uses functions that are more specific to the task at hand.

Testing

In the testing phase, LLMs can be used to generate test cases that exercise the code. For example, an LLM could be asked to generate test cases for a login feature for an e-commerce website by asking questions such as:

- What happens if a user enters an incorrect username?

- What happens if a user enters an incorrect password?

- What happens if a user forgets their password?

Deployment

In the deployment phase, LLMs can be used to generate documentation and scripts that automate the deployment process. For example, an LLM could be asked to generate documentation and scripts that deploy an e-commerce website to a cloud environment by asking questions such as:

- What are the requirements for the cloud environment?

- What are the steps involved in deploying the website to the cloud environment?

- What are the post-deployment tasks that need to be performed?

The LLM can then use this information to generate documentation and scripts that automate the deployment process. This can help to ensure that the system is deployed correctly and efficiently.

LLMs can be a valuable tool for developers at all stages of the code development lifecycle. By automating tasks and providing insights, LLMs can help developers save time and improve the quality of their code.

Summary

Prompt engineering is a powerful technique that can be used to improve the performance of LLMs on a variety of tasks. By providing the LLM with a specific prompt, the prompt engineer can guide the model’s generation of text and improve the accuracy and relevance of the output.