Developing Conversational AI applications by harnessing the power of LLMs

Sreekanth Reddy

Introduction

Conversational AI has become a transformational force in a world where technology continues to alter how people live, work, and communicate. Imagine a computer that can converse with us in a human-like manner, comprehend our needs, and provide insightful answers. In this blog, we share our experience of developing a chatbot that combines LangChain with the natural language processing abilities of OpenAI’s GPT-3.5.

Chatbots are quite useful for addressing client inquiries regarding the company or the website. We can utilize the capabilities of large language models like GPT-3.5 to offer replies based on the data we provide.

Large Language Model (LLM)

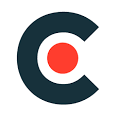

A large language model (LLM) is an advanced AI system that has been trained on large amounts of text data and is capable of understanding and generating human-like text. LLMs are based on the Transformer architecture, which was first introduced in the paper “Attention Is All You Need”.

Figure: The Transformer model architecture (from the paper “Attention Is All You Need”).

LLMs perform so well in responding to user inquiries in a friendly and understandable manner because they are capable of natural language processing and have been well trained on a large corpus of data.

Exploring popular LLMs

Here is a list of a few popular LLMs.

- GPT-4

- GPT-3.5

- BERT

- Command (Cohere)

- Claude (Anthropic)

- LaMDA, etc.

GPT-4 is the most capable of these models, but it takes longer to respond. Instead, we can use GPT-3.5, which responds faster and works well for conversation. (OpenAI has not published a parameter count for GPT-3.5; its predecessor, GPT-3, has 175 billion parameters.)

Training Approaches

The language model we choose is a general-purpose conversational model, so it knows nothing about our private data and documents and cannot answer questions on them. To change this, we must either train the model on our data or supply the relevant data along with each query.

We discuss two approaches for getting the LLM to respond to queries on our data.

- Fine tuning

- Retrieval-augmented generation (RAG)

Fine Tuning

A model can be “fine-tuned” by adjusting its parameters so that it performs better at a particular task. That is, we feed the model examples of inputs and desired outputs, and training updates its parameters so that its responses move closer to those examples.

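Training data must be a JSONL document in which each line is one training example consisting of a prompt-completion pair. This prompt-completion format applies to OpenAI’s older completion models; chat models such as gpt-3.5-turbo are fine-tuned on lists of chat messages instead. The template and sample below give a general idea of what a line of training data looks like.

Template: {"prompt": "<prompt text or question>", "completion": "<response for prompt>"}

Example: {"prompt": "what is 1+1", "completion": "2"}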
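As a minimal sketch of how such a file could be produced, the snippet below serializes a list of prompt-completion pairs into JSONL using only Python’s standard library. The example records and the `to_jsonl` helper are hypothetical and are not part of any OpenAI tooling.

```python
import json

# Hypothetical training examples; real data would come from your own documents.
examples = [
    {"prompt": "what is 1+1", "completion": "2"},
    {"prompt": "what is 2+2", "completion": "4"},
]


def to_jsonl(records):
    """Serialize each record as one JSON object per line (the JSONL layout)."""
    return "\n".join(json.dumps(record) for record in records)


jsonl_text = to_jsonl(examples)
print(jsonl_text)
```

Using `json.dumps` rather than hand-written strings makes sure that quotes and special characters inside prompts are escaped correctly.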

Retrieval-augmented generation (RAG)

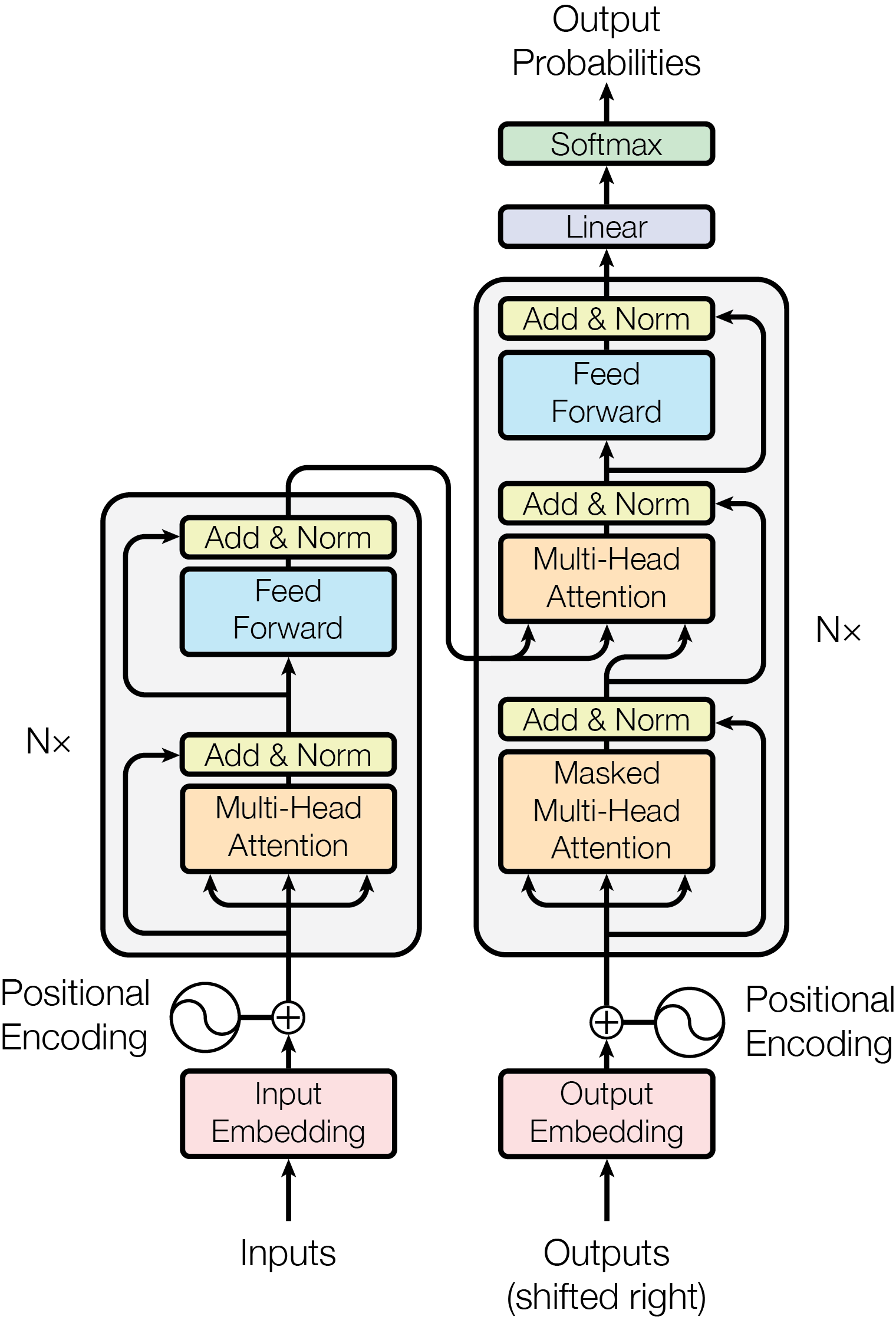

RAG is a machine learning framework that combines retrieval-based and generation-based approaches so that a language model produces coherent and contextually relevant text. In the RAG framework, a retriever selects relevant documents or passages from a large corpus of text for a given query or context. The generator is typically a language model, such as GPT-3.5, that takes the retrieved information and generates human-like text based on it.
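The retriever and generator roles can be illustrated with a toy sketch. It assumes a very crude retriever that ranks passages by word overlap with the query (real systems use embeddings, as described later) and a stub generator standing in for the LLM. All function names and sample passages here are hypothetical.

```python
import re


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, passages, k=2):
    """Toy retriever: rank passages by how many words they share with the query."""
    query_words = tokenize(query)
    ranked = sorted(passages, key=lambda p: len(query_words & tokenize(p)), reverse=True)
    return ranked[:k]


def build_prompt(query, retrieved):
    """Place the retrieved passages in front of the question."""
    context = "\n".join(f"- {p}" for p in retrieved)
    return f"Answer using only these passages:\n{context}\n\nQuestion: {query}"


def generate(prompt):
    """Stub generator; a real system would send the prompt to an LLM here."""
    return f"[LLM response to a {len(prompt)}-character prompt]"


passages = [
    "Our office is open from 9 am to 5 pm on weekdays.",
    "Refunds are processed within 7 days.",
    "The support team can be reached by email.",
]
query = "When is the office open?"
top = retrieve(query, passages, k=1)
prompt = build_prompt(query, top)
print(generate(prompt))
```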

The power of RAG

Fine-tuning is a time-consuming process: preparing the training data takes a lot of work, a large amount of data is needed, and even then the result might not work well.

With RAG, the model produces its response from the current context and the information that has been retrieved. The generated text tends to be more relevant and accurate because it can draw on the most up-to-date information in the document store, with no retraining needed when the data changes. RAG also gives us more control over the generated text, since we can add prompts or restrictions that direct the model’s output.

The RAG framework involves a series of steps, and using LangChain for them makes the procedure simpler.

Streamlining with LangChain

LangChain is an open-source framework for building applications driven by language models, available in both Python and JavaScript. What makes LangChain stand out is its use of chains, which combine multiple steps into a single callable unit and so reduce the amount of code developers have to write.

Handling Data

Data can come in a variety of forms. The first step in the RAG framework is to retrieve text for a specific query or context, so we must extract textual information from different kinds of documents and store it in a way that is easy to retrieve. We first extract the text from documents using LangChain document loaders and then store it as objects, each of which holds pageContent (the document’s content) and the document’s metadata. Each object represents a single document.
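To make the shape of these objects concrete, here is a minimal sketch that does not use LangChain itself. The `Document` dataclass and the `load_text_files` helper are hypothetical stand-ins; the field is written `page_content` here to follow Python naming, and it plays the role of the pageContent field described above.

```python
import tempfile
from dataclasses import dataclass, field
from pathlib import Path


@dataclass
class Document:
    """One loaded document: its text plus metadata such as the source path."""
    page_content: str
    metadata: dict = field(default_factory=dict)


def load_text_files(directory):
    """Load every .txt file in a directory into one Document each."""
    docs = []
    for path in sorted(Path(directory).glob("*.txt")):
        text = path.read_text(encoding="utf-8")
        docs.append(Document(page_content=text, metadata={"source": str(path)}))
    return docs


# Usage with a throwaway directory so the example is self-contained.
with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "faq.txt").write_text("Refunds take 7 days.", encoding="utf-8")
    docs = load_text_files(tmp)

print(docs[0].page_content, docs[0].metadata)
```

Keeping the source path in the metadata makes it possible to tell later which document a retrieved chunk came from.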

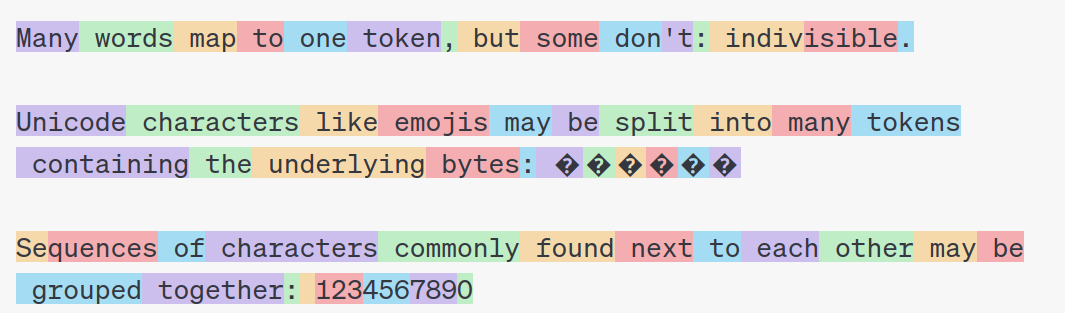

Overcoming Token Limitations

One of the main constraints when applying RAG is that LLMs cannot accept unlimited text at once. GPT-3.5 accepts up to 4,096 tokens per request, and that limit covers both the prompt and the generated reply. Tokens are the units of text the model processes; a token can be a character, a word, or a subword. Look at the image below for a better visual understanding.

The objects we just created contain different amounts of text, so an object that holds information relevant to a query may well exceed the token limit. To avoid this, we split the text into smaller objects, each containing a smaller chunk of text, using settings that suit our needs. The chunk size is the first thing we need to decide.

Optimizing Chunk Sizes

The chunk size is the character limit for each object. As mentioned previously, GPT-3.5’s maximum token limit is 4,096 tokens. As a rule of thumb, we reserve about 2,000 tokens for retrieved information and about 2,000 tokens for the user query, past conversation, and the model’s reply (more on this later).

Retrieving a single large text object gives the LLM a lot of information from one place but misses relevant information in other documents. We therefore retrieve four different objects for each query, which lets GPT-3.5 draw on a wider pool of sources and produce more complete answers. With a retrieval budget of 2,000 tokens shared across four objects, each chunk should be limited to 2,000 / 4 = 500 tokens.

Let’s assume that 500 tokens correspond to roughly 400 words, and that a word has four characters on average. That gives a chunk size of 4 x 400 = 1,600 characters, which we can also round down to 1,500. We set the chunk overlap to about 10% of the chunk size, that is, 160 or 150 characters, so each chunk shares that many characters with the chunk before it and the chunk after it. The overlap reduces the risk of losing information at chunk boundaries and gives a smoother transition between objects from the same document. With this method, the LLM receives a variety of data from different documents while the chunk size stays modest.
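The arithmetic above can be written as a small helper. This is only a sketch of the rule of thumb: the function name and parameters are hypothetical, and the 10% overlap is an assumption inferred from the 160-out-of-1,600 figures in the text.

```python
def chunk_settings(retrieval_budget_tokens=2000, chunks_per_query=4,
                   words_per_chunk=400, chars_per_word=4, overlap_percent=10):
    """Derive per-chunk token budget, chunk size, and overlap (both in characters)."""
    tokens_per_chunk = retrieval_budget_tokens // chunks_per_query
    chunk_size_chars = words_per_chunk * chars_per_word
    chunk_overlap_chars = chunk_size_chars * overlap_percent // 100
    return tokens_per_chunk, chunk_size_chars, chunk_overlap_chars


tokens_per_chunk, chunk_size, chunk_overlap = chunk_settings()
print(tokens_per_chunk, chunk_size, chunk_overlap)  # 500 1600 160
```

The word and character averages are rough estimates, so it is worth measuring real token counts on your own documents before fixing these values.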

Once we’ve established an acceptable chunk size, we can’t just cut the text after every 1,500 characters. We must consider the context so that each split occurs at a logical and meaningful point in the text, which helps maintain the data’s integrity and coherence when it is processed by the LLM. For this, we use the RecursiveCharacterTextSplitter provided by LangChain, which tries each separator from a list in sequence until the pieces are small enough. The default separators are ["\n\n", "\n", " ", ""]. The effect is to keep paragraphs together for as long as possible, then lines, and finally words, because those are generally the most strongly semantically related pieces of text.
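The idea of trying separators in sequence can be sketched in plain Python. This is a simplified illustration and not LangChain’s implementation: the `recursive_split` function is hypothetical, and it leaves out chunk overlap to stay short.

```python
def recursive_split(text, chunk_size, separators=("\n\n", "\n", " ", "")):
    """Split text into pieces of at most chunk_size characters.

    Separators are tried in order, so paragraphs are kept whole when they fit,
    then lines, then words; a hard cut is the last resort.
    """
    if len(text) <= chunk_size:
        return [text] if text else []
    if not separators or separators[0] == "":
        # No separator left: cut every chunk_size characters.
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]

    sep, remaining = separators[0], separators[1:]
    chunks, current = [], ""
    for part in text.split(sep):
        candidate = part if not current else current + sep + part
        if len(candidate) <= chunk_size:
            current = candidate  # keep merging small parts into one chunk
            continue
        if current:
            chunks.append(current)
        if len(part) <= chunk_size:
            current = part
        else:
            # The part alone is too long: retry it with the finer separators.
            chunks.extend(recursive_split(part, chunk_size, remaining))
            current = ""
    if current:
        chunks.append(current)
    return chunks


text = (
    "Para one line one.\nPara one line two.\n\n"
    "Para two is a bit longer than the limit allows."
)
chunks = recursive_split(text, chunk_size=40)
for chunk in chunks:
    print(repr(chunk))
```

In this example the first paragraph fits in one chunk and stays intact, while the second paragraph is too long and falls back to splitting on spaces.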

Harnessing Embeddings for Semantic Understanding

Embeddings are vector representations of words or phrases that capture their semantic meaning. They are commonly used in machine learning tasks such as information retrieval, image recognition, recommender systems, and many more. Embeddings can be generated using pre-trained models such as BERT, RoBERTa, OpenAI’s text-embedding-ada-002 model, and so on. These models are trained on large amounts of text data and can capture the contextual meaning of words and sentences. Each word or sentence is represented as a numerical vector, with similar words or sentences lying close together in vector space. This lets us measure the semantic similarity between pieces of text, run a similarity search, and retrieve the documents that are closest to the user query.

We use OpenAI’s text-embedding-ada-002 model for the creation of embeddings.
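One common way to compare two embedding vectors is cosine similarity. The sketch below uses made-up three-dimensional vectors purely for illustration; real text-embedding-ada-002 embeddings have 1,536 dimensions and would come from the embedding API.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: near 1.0 means similar direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy embeddings, not produced by any real model.
cat = [0.9, 0.1, 0.0]
kitten = [0.8, 0.2, 0.1]
invoice = [0.0, 0.2, 0.9]

print(cosine_similarity(cat, kitten))
print(cosine_similarity(cat, invoice))
```

The related pair (cat, kitten) scores much higher than the unrelated pair (cat, invoice), which is the property a similarity search relies on.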

Unlocking the Potential of Vector Stores

Vector stores or vector databases are tools for storing and effectively searching for high-dimensional vectors, like numerical vectors representing words or sentences. These databases are designed to handle large amounts of data and perform fast similarity searches, making them useful for applications like natural language processing and information retrieval. By organizing the vectors in a structured manner, vector databases enable efficient storage and retrieval of similar documents, improving the accuracy and speed of search results.

Databases such as ChromaDB and Pinecone, and libraries such as FAISS, provide features specifically tailored for vector search. They offer indexing techniques that optimize the storage and retrieval of high-dimensional vectors, allowing for efficient similarity searches. Some also support related operations such as clustering and approximate nearest neighbor search.

Exploring FAISS for a Similarity Search

FAISS, or Facebook AI Similarity Search, is an open-source library developed by Facebook AI Research. FAISS is designed to efficiently handle large-scale vector datasets and provides various indexing methods, including IVF (Inverted File) and HNSW (Hierarchical Navigable Small World). These indexing methods allow for faster search and retrieval of similar vectors, making FAISS suitable for applications such as text retrieval or image search. Additionally, FAISS supports GPU acceleration, enabling even faster computation for similarity search tasks.

The user query or context is converted into an embedding vector using the same model that was used for the documents. FAISS then compares this vector to the vectors in the dataset and calculates similarity scores between them. The top-k most similar vectors are retrieved, and the text they represent is used as context for the LLM, which can then generate a response.
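The top-k step can be sketched without FAISS as an exhaustive search over all stored vectors. This brute-force stand-in uses squared L2 distance, so smaller means more similar; the function names and toy vectors are hypothetical, and the code does not call the FAISS API. FAISS’s indexes exist to make this search fast on large datasets.

```python
import heapq


def squared_l2(a, b):
    """Squared Euclidean distance between two vectors (smaller is more similar)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def top_k(query_vector, stored_vectors, k=4):
    """Return (index, distance) pairs for the k stored vectors nearest the query."""
    scored = ((squared_l2(query_vector, v), i) for i, v in enumerate(stored_vectors))
    return [(i, d) for d, i in heapq.nsmallest(k, scored)]


# Toy two-dimensional "embeddings"; index i would map back to chunk i of the text.
stored = [[0.0, 0.0], [1.0, 1.0], [0.9, 1.1], [5.0, 5.0]]
nearest = top_k([1.0, 1.0], stored, k=2)
print(nearest)
```

The returned indices are what the application uses to look up the matching text chunks and pass them to the LLM as context.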

Enhancing conversational memory

Now that the documents have been turned into embedding vectors and are ready to supply information to the LLM, we can begin zero-shot prompting. However, the LLM has no memory of previous interactions, so we must add memory to let the chatbot take earlier turns into account and be more conversational. Because of the token limit, we cannot pass the complete history into the prompt as the dialogue grows, so we need a compact way to convey it. To capture historical context, we combine buffer window memory with summary memory.

- BufferWindowMemory: It keeps the K most recent exchanges verbatim, which helps the bot answer follow-up questions.

- Summary: It generates a running summary of the full discussion, which preserves context from earlier exchanges that BufferWindowMemory no longer holds.

Note: Using summary memory slows down the bot’s response because it has to summarize and generate the text, so if you need your bot to respond quickly, avoid using summary memory and only use buffer window memory.
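The combination of the two memories can be sketched as follows. This is a simplified illustration and not LangChain’s memory classes: the `WindowAndSummaryMemory` class is hypothetical, it folds only the exchanges that drop out of the window into the summary, and the summarizer is a placeholder where a real bot would call the LLM.

```python
from collections import deque


class WindowAndSummaryMemory:
    """Keep the last k exchanges verbatim and summarize the ones that drop out."""

    def __init__(self, k, summarize):
        if k < 1:
            raise ValueError("k must be at least 1")
        self.recent = deque(maxlen=k)
        self.summary = ""
        self.summarize = summarize

    def add_turn(self, human, ai):
        if len(self.recent) == self.recent.maxlen:
            # The oldest exchange is about to be evicted: fold it into the summary.
            self.summary = self.summarize(self.summary, self.recent[0])
        self.recent.append((human, ai))

    def recent_text(self):
        return "\n".join(f"Human: {h}\nAI: {a}" for h, a in self.recent)


def naive_summarize(summary, turn):
    """Placeholder summarizer; a real bot would ask the LLM to condense the text."""
    human, _ = turn
    prefix = summary + " " if summary else ""
    return prefix + f"User asked: {human}"


memory = WindowAndSummaryMemory(k=2, summarize=naive_summarize)
memory.add_turn("Hi", "Hello!")
memory.add_turn("What are your hours?", "9 to 5.")
memory.add_turn("And on weekends?", "Closed.")
print(memory.summary)
print(memory.recent_text())
```

Because the summarizer is only called when an exchange leaves the window, short conversations never pay the extra cost mentioned in the note above.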

Designing effective prompt templates

A “prompt” is the text given to the bot as input, and it serves as the starting point for the bot’s response. It can be a specific query, a request for information, or any other input the bot needs in order to generate a relevant and coherent response. The quality and specificity of the prompt greatly influence the accuracy and relevance of the response, so it is important to write clear and concise prompts. In our case, the prompt should instruct the model to answer only from the provided collection of documents; if the answer cannot be found there, the model must respond with “I don’t know” or a similar phrase and never with an answer drawn from its own knowledge. The prompt must also include the conversation memory so that the bot can refer back to previous interactions and maintain context. Finally, it should include the human input.

Example template:

PROMPT_TEMPLATE:

You’re an AI assistant having a conversation with a human; the scenario is that you have to respond to the human based solely on the information present in the documents below and queries related to the documents only; if you can’t find the answer in the provided documents, simply say “Sorry, I don’t have information on that” and don’t try to make up an answer in any case.

Documents: {context}

Conversation Summary: {conversation_summary}

Recent conversation: {recent_conversation}

Human input: {question}

The items enclosed in curly braces are the prompt’s input variables, which are replaced with the appropriate text when the prompt is passed into the chain.
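The substitution of input variables can be sketched with Python’s built-in string formatting. The template below is an abbreviated version of the example above with the instruction text shortened, and the filled-in values are hypothetical.

```python
# Abbreviated template; the variable names match the example template above.
PROMPT_TEMPLATE = (
    "Answer only from the documents below.\n\n"
    "Documents: {context}\n\n"
    "Conversation Summary: {conversation_summary}\n\n"
    "Recent conversation: {recent_conversation}\n\n"
    "Human input: {question}"
)

prompt = PROMPT_TEMPLATE.format(
    context="We ship to Europe and Asia.",
    conversation_summary="The user asked about delivery options.",
    recent_conversation="Human: Hi\nAI: Hello! How can I help?",
    question="Do you ship abroad?",
)
print(prompt)
```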

Simplifying Response Generation with Chains

The final step is to generate a response based on the input and the retrieved documents, using the OpenAI GPT-3.5 model. For each query, we must retrieve similar documents from the vector store, take the previous conversation from memory, build a prompt, and pass it to the LLM. A conversational chain from LangChain simplifies this by automating the retrieval of similar documents, the inclusion of conversation history, and the construction of the prompt. With such a chain, each query becomes a single call, and we can handle multiple queries in a conversation while keeping responses grounded in the retrieved documents.
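The per-query steps listed above can be bundled into one function, which is the essence of a chain. The sketch below is a plain-Python illustration and not LangChain’s chain classes: `make_chain`, the fake retriever, and the fake LLM are hypothetical stand-ins, and only window memory is included to keep it short.

```python
def make_chain(retrieve, llm, template, window=2):
    """Bundle retrieval, memory, prompt building, and the LLM call into one function."""
    history = []  # (question, answer) pairs from earlier turns

    def chain(question):
        context = "\n".join(retrieve(question))
        recent_turns = history[-window:] if window else []
        recent = "\n".join(f"Human: {q}\nAI: {a}" for q, a in recent_turns)
        prompt = template.format(
            context=context, recent_conversation=recent, question=question
        )
        answer = llm(prompt)
        history.append((question, answer))
        return answer

    return chain


TEMPLATE = (
    "Documents: {context}\n"
    "Recent conversation: {recent_conversation}\n"
    "Human input: {question}"
)
seen_prompts = []  # records what the fake LLM receives, for inspection


def fake_retrieve(question):
    """Stand-in for a vector store lookup."""
    return ["We ship to Europe and Asia."]


def fake_llm(prompt):
    """Stand-in for a GPT-3.5 call."""
    seen_prompts.append(prompt)
    return "Yes, we ship to Europe and Asia."


chat = make_chain(fake_retrieve, fake_llm, TEMPLATE)
first = chat("Do you ship abroad?")
second = chat("How long does it take?")
print(seen_prompts[1])
```

The second prompt contains the first question and answer, which is how the bot can resolve follow-up questions such as “How long does it take?”.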

Conclusion

In conclusion, combining LangChain with OpenAI’s GPT-3.5 lets us build chatbots that deliver meaningful and context-aware replies from our own data. Using large language models (LLMs) together with embeddings, vector search libraries like FAISS, conversational memory, and carefully designed prompt templates, we can ground responses in our documents and keep conversations coherent. As natural language understanding and generation continue to improve, chatbots are becoming increasingly useful tools for a variety of applications, including customer assistance, virtual assistants, and content production.